Security

Establish Best Practice to protect the confidentiality and integrity of data

Security speaks for itself; as Werner Vogels says, “security is job zero”. In this pillar we look not only at the data you are storing and how it is encrypted, but also at labelling and identifying your data, marking it as sensitive where needed.

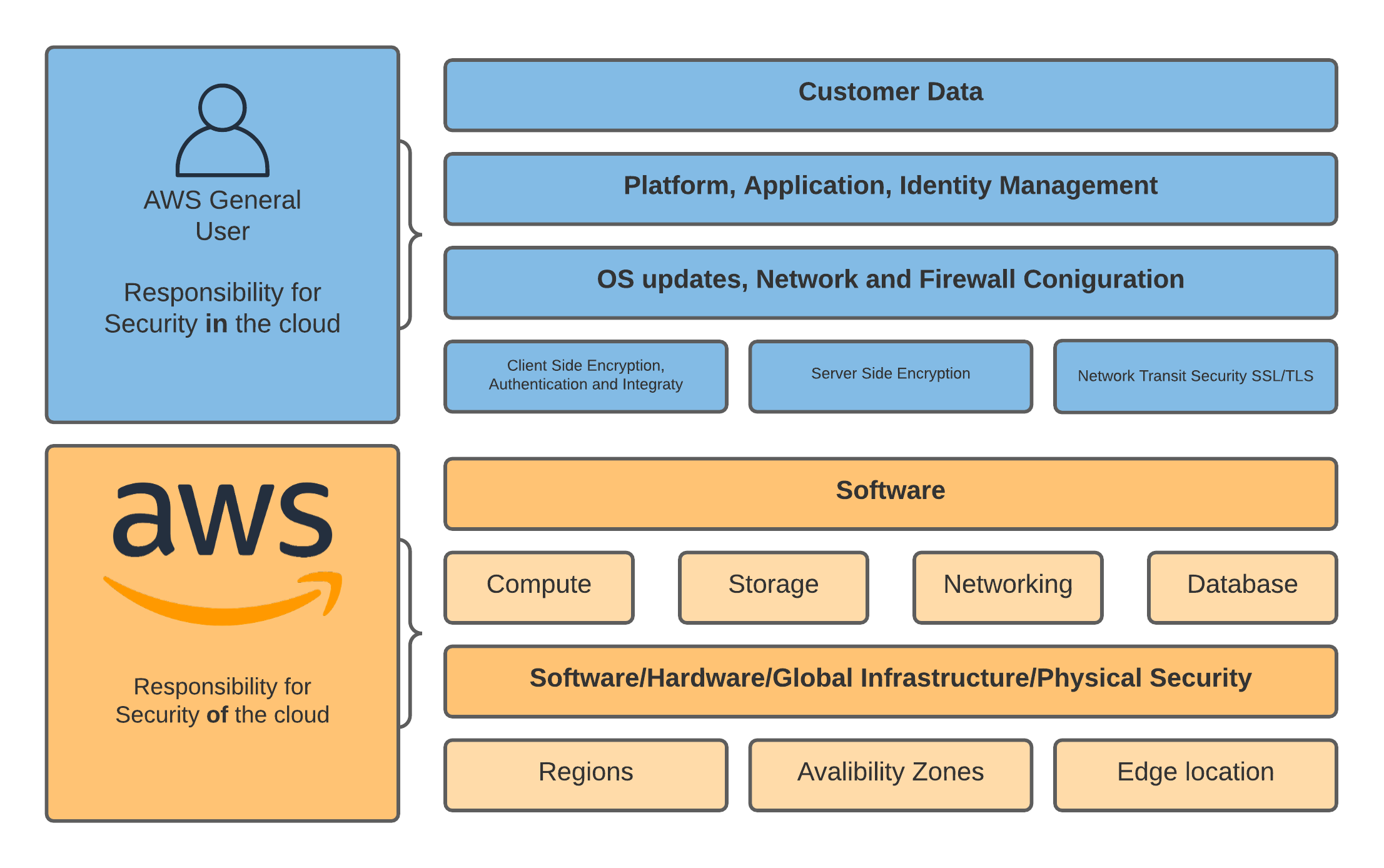

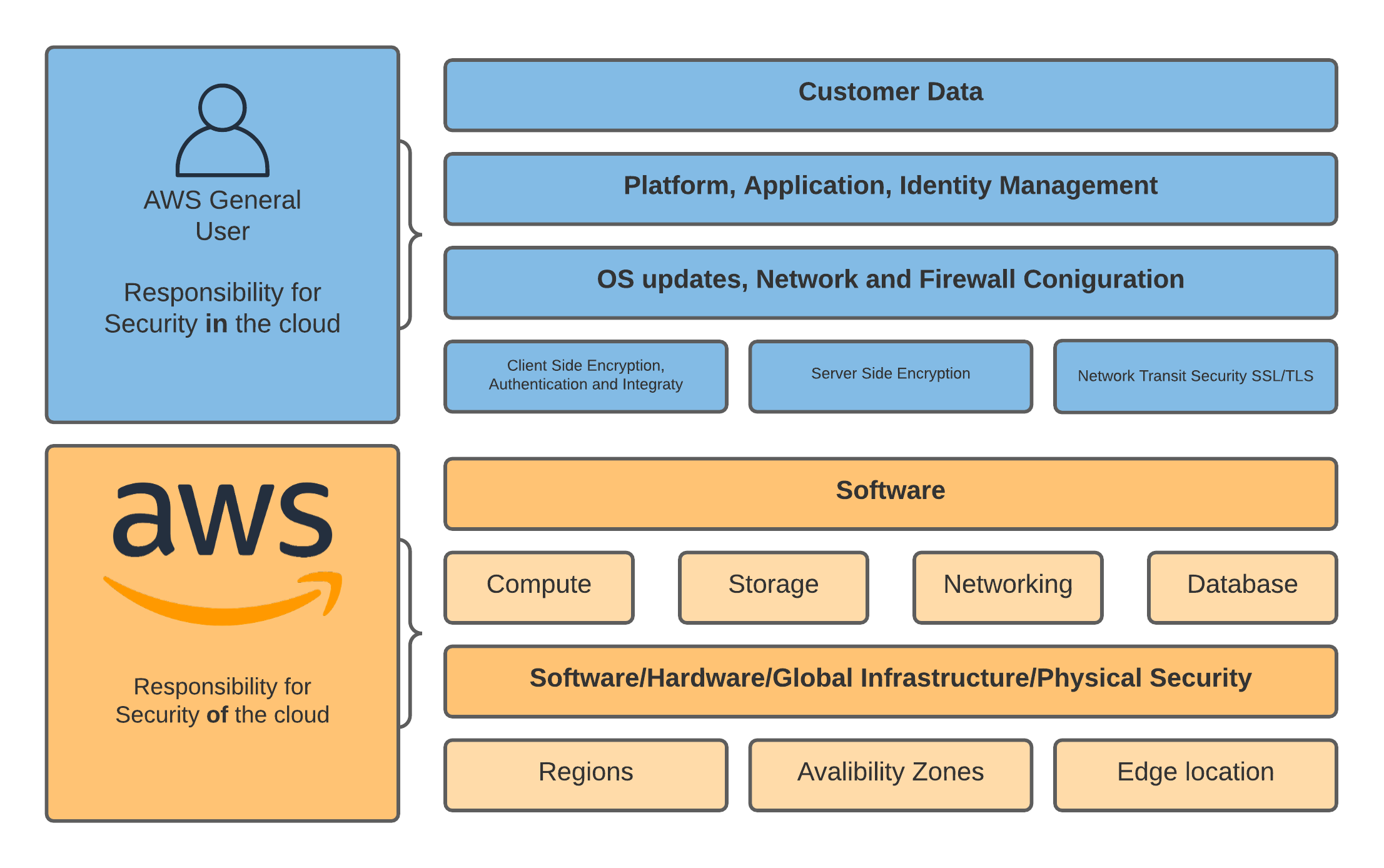

Shared Responsibility

Before we get going, let’s talk about shared responsibility. AWS is responsible for running the cloud; you are responsible for what’s running in the cloud. Think of this in terms of EC2: when you launch a server it runs on hardware that Amazon looks after, connected to a network that Amazon also looks after. Where you come in is the instance itself: you configure the security group around it, and you need to patch the software on it. It’s a simple concept, but one you need to understand.

S3 is a similar story. S3 (the service) is run by Amazon, which includes making sure all the disks are healthy, the APIs are up and running, and a plethora of other things. When you create a bucket it’s locked down by default, with no public access. In fact, you have to work pretty hard to disable everything and expose your data these days! However, the data is your responsibility, and this chapter has some recipes to help you secure that data in the cloud. The shared responsibility diagram shows where you need to do your bit to protect your data.

1 - Bucket Policies

Set out what you can and can’t do within a bucket

OpEx

Sec

Rel

Perf

Cost

Sus

Bucket policies are attached to a bucket by the account that owns it; only that account can do this. They are written in JSON and are limited to 20 KB in size. The policy is then used to grant access to your resources. Let’s go through the terminology used for bucket policies first, by looking at the following elements and what they refer to:

Resources: These can be buckets, objects, access points, and jobs for which you can allow or deny permissions. You refer to them by Amazon Resource Name (ARN).

Actions: Each resource type supports fine-grained actions, so you can give a user the least privilege they need. In the case of S3 it could look like the following example:

```json
"s3:CreateBucket",
"s3:ListAllMyBuckets",
"s3:GetBucketLocation"
```

Effect: The effect that is applied when a request matches the statement, either Allow or Deny. If you don’t explicitly grant an Allow, the default is to deny.

Principal: The account or user who is allowed access to the actions and resources in the statement. In a bucket policy, the principal is the user, account, service, or other entity that receives the permission.

Condition: Conditions determine when a statement is in effect, and can be used to further limit the scope of a particular action. An example would be:

```json
"Condition": {
  "StringEquals": {
    "s3:x-amz-grant-full-control": "id=AccountA-CanonicalUserID"
  }
}
```
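To see how the Effect element plays out, here is a minimal Python sketch of the decision rule: an explicit Deny always wins, an Allow grants access, and anything unmatched is denied by default. The `decide` helper and its cut-down statements (exact action names only, with no wildcards, principals, resources, or conditions) are assumptions made for illustration; real S3 policy evaluation considers far more than this.

```python
def decide(statements, action):
    """Return "Allow" or "Deny" for one requested action.

    Simplified on purpose: statements match on exact action names only.
    """
    matched = [s["Effect"] for s in statements if action in s["Action"]]
    if "Deny" in matched:
        return "Deny"   # an explicit Deny overrides any Allow
    if "Allow" in matched:
        return "Allow"
    return "Deny"       # nothing matched: implicit (default) deny


statements = [
    {"Effect": "Allow", "Action": ["s3:ListBucket", "s3:GetObject"]},
    {"Effect": "Deny", "Action": ["s3:GetObject"]},
]

for action in ("s3:ListBucket", "s3:GetObject", "s3:DeleteObject"):
    print(action, "->", decide(statements, action))
```

Only `s3:ListBucket` is allowed here: `s3:GetObject` is caught by the explicit Deny, and `s3:DeleteObject` is never mentioned, so it falls through to the default deny.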

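A good example of a condition is requiring multi-factor authentication (MFA) when someone tries to delete an object. In a bucket policy, that means denying the delete actions for any request that was not authenticated with MFA. The Null condition matches when the aws:MultiFactorAuthAge key is missing, which is the case for requests made without MFA. You’d need something like the following policy (replace <bucket-name> with your own bucket):

```json
{
  "Id": "Policy1640609878106",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Stmt1640609875204",
      "Action": [
        "s3:DeleteBucket",
        "s3:DeleteBucketPolicy",
        "s3:DeleteObject",
        "s3:DeleteObjectTagging",
        "s3:DeleteObjectVersion",
        "s3:DeleteObjectVersionTagging"
      ],
      "Effect": "Deny",
      "Resource": [
        "arn:aws:s3:::<bucket-name>",
        "arn:aws:s3:::<bucket-name>/*"
      ],
      "Condition": {
        "Null": {
          "aws:MultiFactorAuthAge": "true"
        }
      },
      "Principal": "*"
    }
  ]
}
```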
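A policy like this can also be generated per bucket rather than edited by hand. The sketch below builds one common form of the MFA rule, a Deny on object deletes when the request carries no MFA information, and checks the result against the 20 KB bucket policy size limit. The function name `mfa_delete_policy`, the bucket name `example-bucket`, and the choice of actions are assumptions made for this illustration.

```python
import json

MAX_POLICY_BYTES = 20 * 1024  # bucket policy size limit (20 KB)


def mfa_delete_policy(bucket_name):
    """Build a policy that denies object deletes for requests made without MFA."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyDeleteWithoutMFA",
                "Effect": "Deny",
                "Principal": "*",
                "Action": ["s3:DeleteObject", "s3:DeleteObjectVersion"],
                # Object-level actions apply to the objects inside the bucket.
                "Resource": f"{bucket_arn}/*",
                # The key is absent (null) when the request was not made with MFA.
                "Condition": {"Null": {"aws:MultiFactorAuthAge": "true"}},
            }
        ],
    }


policy_json = json.dumps(mfa_delete_policy("example-bucket"), indent=2)
if len(policy_json.encode("utf-8")) > MAX_POLICY_BYTES:
    raise ValueError("policy exceeds the 20 KB bucket policy limit")
print(policy_json)
```

The printed JSON is what you would pass as the bucket policy document, whether through Terraform, the console, or the API.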

Let’s take this example (chapter2/001) forward, by showing you how to add a policy. In this case, we are going to create an IAM role and then allow anyone with that role attached or anyone who can assume that role to List the S3 bucket contents. The code below enables this:

```hcl
resource "aws_iam_role" "this" {
  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Principal": {
        "Service": "ec2.amazonaws.com"
      },
      "Effect": "Allow",
      "Sid": ""
    }
  ]
}
EOF
}

data "aws_iam_policy_document" "bucket_policy" {
  statement {
    principals {
      type        = "AWS"
      identifiers = [aws_iam_role.this.arn]
    }

    actions = [
      "s3:ListBucket",
    ]

    resources = [
      "arn:aws:s3:::${local.bucket_name}",
    ]
  }
}
```

Finally, let’s make sure we attach the policy to the bucket. If you check the Terraform, you’ll see two additional lines that add the policy to the S3 bucket creation:

```hcl
attach_policy = true
policy        = data.aws_iam_policy_document.bucket_policy.json
```

To run this example (chapter3/002) make sure you are in the correct folder and run:

```shell
terraform init
terraform plan
terraform apply
```

You’ll need to type yes when prompted.

To clean up, run terraform destroy and answer yes to the prompt.

Technical considerations:

Take permissions very seriously and work on the principle of least privilege: start with a minimum set of permissions and grant additional permissions as necessary. Doing so is more secure than starting with permissions that are too lenient and then trying to tighten them later. You can also use IAM Access Analyzer to monitor this.
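As a rough illustration of what checking for over-lenient permissions can mean in practice, the sketch below scans a policy document for Allow statements that use wildcard actions or an open principal. The helper name `find_broad_grants` and the sample policy (including the account ID and role name) are hypothetical, and this is a simple lint under those assumptions, not a substitute for IAM Access Analyzer.

```python
def find_broad_grants(policy):
    """Return warnings for Allow statements that use wildcards."""
    warnings = []
    for stmt in policy.get("Statement", []):
        if stmt.get("Effect") != "Allow":
            continue
        sid = stmt.get("Sid", "<no Sid>")
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]  # "Action" may be a single string
        for action in actions:
            if action == "*" or action.endswith(":*"):
                warnings.append(f"{sid}: wildcard action {action}")
        if stmt.get("Principal") in ("*", {"AWS": "*"}):
            warnings.append(f"{sid}: open to any principal")
    return warnings


# Hypothetical policy: one over-broad statement, one least-privilege statement.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "TooBroad", "Effect": "Allow", "Principal": "*",
         "Action": "s3:*", "Resource": "*"},
        {"Sid": "ListOnly", "Effect": "Allow",
         "Principal": {"AWS": "arn:aws:iam::111122223333:role/example"},
         "Action": ["s3:ListBucket"], "Resource": "arn:aws:s3:::example-bucket"},
    ],
}

for warning in find_broad_grants(policy):
    print(warning)
```

Only the `TooBroad` statement is flagged; the `ListOnly` statement names one action and one role, which is the shape to aim for.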

Business Considerations:

Business leaders need to help identify who within the company should and shouldn’t have access. They should also be consulted on what data should be made public.

2 - Bucket Permissions

Define security at the bucket level

OpEx

Sec

Rel

Perf

Cost

Sus

You can also set bucket-level permissions in S3, which can override object-level permissions that someone tries to set. This is a super quick thing to enable in Terraform, and if you create a bucket in the AWS console it will default to these values. Once again, these settings protect you from accidentally exposing data. The settings below are pretty self-explanatory and are included in the example.

```hcl
# S3 bucket-level Public Access Block configuration
block_public_acls       = true
block_public_policy     = true
ignore_public_acls      = true
restrict_public_buckets = true
```

The ACL permissions you can set at the bucket level are READ, WRITE, READ_ACP, WRITE_ACP and FULL_CONTROL. These can also be applied at an individual object level, with the exception of WRITE, which isn’t applicable to objects. Be particularly careful with the ACP permissions, as these let a user read (READ_ACP) or rewrite (WRITE_ACP) the access control list itself.

Take a look at the following to see how these permissions map to IAM permissions: https://docs.aws.amazon.com/AmazonS3/latest/userguide/acl-overview.html#permissions

Even if you enable all available ACL options in the Amazon S3 console, the ACL alone won’t allow everyone to download objects from your bucket. However, depending on which option you select, any user could perform these actions:

- If you select List objects for the Everyone group, then anyone can get a list of objects that are in the bucket.

- If you select Write objects, then anyone can upload, overwrite, or delete objects that are in the bucket.

- If you select Read bucket permissions, then anyone can view the bucket’s ACL.

- If you select Write bucket permissions, then anyone can change the bucket’s ACL.
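The console options above correspond to the READ, WRITE, READ_ACP and WRITE_ACP permissions granted to the Everyone (AllUsers) group. As a hedged sketch, the helper below lists which of those permissions an ACL hands to that group. The name `public_grants` and the sample data are made up for illustration; the dictionary layout follows the shape that boto3's `get_bucket_acl` returns.

```python
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"


def public_grants(acl):
    """List the permissions an ACL grants to the Everyone (AllUsers) group."""
    return [
        grant["Permission"]
        for grant in acl.get("Grants", [])
        if grant.get("Grantee", {}).get("URI") == ALL_USERS
    ]


# Sample data in the shape returned by get_bucket_acl (hypothetical values).
sample_acl = {
    "Grants": [
        {"Grantee": {"Type": "CanonicalUser", "ID": "owner-id"}, "Permission": "FULL_CONTROL"},
        {"Grantee": {"Type": "Group", "URI": ALL_USERS}, "Permission": "READ"},
        {"Grantee": {"Type": "Group", "URI": ALL_USERS}, "Permission": "WRITE_ACP"},
    ]
}

print(public_grants(sample_acl))
```

An empty list means the ACL grants nothing to the public; anything else deserves a closer look, especially WRITE and WRITE_ACP.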

To prevent any accidental change to public access on a bucket’s ACL, you can configure public access settings for the bucket. If you select Block new public ACLs and uploading public objects, then users can’t add new public ACLs or upload public objects to the bucket. If you select Remove public access granted through public ACLs, then all existing or new public access granted by ACLs is respectively overridden or denied. The changes in our Terraform set these permissions.
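If you manage a bucket outside Terraform, the same four settings can be applied through the S3 API. The following is a minimal sketch using boto3's `put_public_access_block` call; the helper name `block_all_public_access` is an assumption, and the client is passed in so the function can be exercised without touching a real account.

```python
def block_all_public_access(s3_client, bucket_name):
    """Turn on all four public access block settings for one bucket."""
    config = {
        "BlockPublicAcls": True,        # reject new public ACLs and public uploads
        "IgnorePublicAcls": True,       # ignore any public ACLs that already exist
        "BlockPublicPolicy": True,      # reject bucket policies that grant public access
        "RestrictPublicBuckets": True,  # restrict access to buckets with public policies
    }
    s3_client.put_public_access_block(
        Bucket=bucket_name,
        PublicAccessBlockConfiguration=config,
    )
    return config
```

With boto3 installed and credentials configured, a call would look like `block_all_public_access(boto3.client("s3"), "example-bucket")`, where `example-bucket` is a placeholder name.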

To run this example (chapter2/002) make sure you are in the correct folder and run:

```shell
terraform init
terraform plan
terraform apply
```

You’ll need to type yes when prompted.

To clean up, run terraform destroy and answer yes to the prompt.

Technical considerations:

These policies can affect the way developers and applications interact with the data stored in AWS. Some buckets are public for a reason; knowing which ones they are, and only allowing objects flagged as non-sensitive into them, will help to keep you safe.

Business considerations:

Help the DevOps teams identify data correctly. You may work with these files on a daily basis, which makes you the best person to say “this needs protecting at all costs” or “this data is fine to be public”. It’s a team effort to secure the data.

3 - Enabling Encryption

Types of encryption at rest

OpEx

Sec

Rel

Perf

Cost

Sus

Just as Werner Vogels (CTO of Amazon) said: dance like no one is watching, encrypt data like everyone is! In this light, there are a few options. You could encrypt your data programmatically before you upload it, but this requires your own resources and managing your own key rotation. You can of course rely on AWS to make this easier for you. In (chapter3/004) we use code that lets AWS generate a key and manage it entirely for you. This is the default SSE-S3 method, and it’s best to enable it as a rule on the bucket. Take note of the line:

sse_algorithm = "AES256"

This is the part that tells AWS to apply default encryption. It’s the cheapest and easiest way to encrypt at rest. You’ll also see the code at the bottom that uploads our file. Note there’s nothing special on that file to say “hey, let’s encrypt”; encryption is applied automatically at the bucket level.

To run this example (chapter2/003) make sure you are in the correct folder and run:

```shell
terraform init
terraform plan
terraform apply
```

You’ll need to type yes when prompted.

To clean up, run terraform destroy and answer yes to the prompt.

There is another way, however: AWS Key Management Service (KMS), also referred to as SSE-KMS. This allows you to manage keys at a more granular level and rotate them when and if needed. We first need to define a KMS key:

```hcl
resource "aws_kms_key" "objects" {
  description             = "KMS key is used to encrypt bucket objects"
  deletion_window_in_days = 7
}
```

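The value deletion_window_in_days is the waiting period (here 7 days) between scheduling the key for deletion and the key actually being deleted. During that window you can still cancel the deletion; once the key is gone, you lose access to any data encrypted with it! To use SSE-KMS, slightly tweak the rule in the S3 bucket: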

```hcl
kms_master_key_id = aws_kms_key.objects.arn
sse_algorithm     = "aws:kms"
```

Note that the object we upload has no changes because we are applying this at the bucket level.
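For comparison, the two bucket-level options map onto a single API structure. The sketch below builds the default-encryption configuration for either SSE-S3 or SSE-KMS and applies it with boto3's `put_bucket_encryption`. The helper names and the idea of switching on an optional key ARN are assumptions for illustration; the client is passed in rather than created, so nothing here contacts AWS on its own.

```python
def default_encryption_config(kms_key_arn=None):
    """Build a default-encryption configuration: SSE-S3, or SSE-KMS if a key is given."""
    if kms_key_arn is None:
        default = {"SSEAlgorithm": "AES256"}  # SSE-S3: AWS creates and manages the key
    else:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_arn}  # SSE-KMS
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}


def apply_default_encryption(s3_client, bucket_name, kms_key_arn=None):
    """Apply the configuration to a bucket using a boto3 S3 client."""
    s3_client.put_bucket_encryption(
        Bucket=bucket_name,
        ServerSideEncryptionConfiguration=default_encryption_config(kms_key_arn),
    )


print(default_encryption_config())
```

Either way, the rule lives on the bucket, which is why the uploaded object needs no encryption settings of its own.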

To run this example (chapter3/005) make sure you are in the correct folder and run:

```shell
terraform init
terraform plan
terraform apply
```

You’ll need to type yes when prompted.

To clean up, run terraform destroy and answer yes to the prompt.

Technical considerations

Remember that if you use KMS, you must take responsibility for managing that key and access to it. It will also greatly increase the number of API calls to KMS, which will bump your costs up. Encryption always has a cost in terms of accessing the data too: there’s extra work to be done, so expect it to be slightly slower to retrieve an object.

Business considerations

You need to be aware that SSE-KMS, whilst being the form of encryption you have the most control over, is going to increase your costs. However, it may be the more suitable choice if the data has been classified as highly sensitive. You can use different encryption on different buckets, so classify your data accordingly and pick the right encryption for you, but please do encrypt!

4 - Signed URLs

Share Files Securely

OpEx

Sec

Rel

Perf

Cost

Sus

If all objects in a bucket are private by default, you are going to need a mechanism to share files with others at some point. By default, only the object owner has permission to access these objects. However, the object owner can optionally share objects with others by creating a presigned URL and setting an expiration time for how long that URL is valid.

One way to generate signed URLs is to run a script like the one below. Create a new file called signedurl.py and, with your favourite editor, add the following content:

```python
#!/usr/bin/env python3
import argparse

import boto3

parser = argparse.ArgumentParser()
parser.add_argument('-b', '--bucket', help='Name of your S3 bucket', required=True)
parser.add_argument('-o', '--object', help='Name of the object + prefix in your bucket', required=True)
parser.add_argument('-t', '--time', type=int, help='Expiry in seconds. Default = 60', default=60)
args = vars(parser.parse_args())


def get_signed_url(time, bucket, obj):
    """Return a presigned GET URL for the object, valid for `time` seconds."""
    s3 = boto3.client('s3')
    url = s3.generate_presigned_url('get_object', Params={'Bucket': bucket, 'Key': obj}, ExpiresIn=time)
    return url


try:
    url = get_signed_url(args['time'], args['bucket'], args['object'])
    print(url)
except Exception as err:
    # Report the underlying error rather than hiding it
    print(f'Something odd happened: {err}')
```

You’ll need to set the permissions on the script so you can execute the file, for example with chmod +x signedurl.py.

To run the script you need to supply -b, which is the bucket name, -o, which is the object, and optionally -t, which is the time in seconds (it defaults to 60). The example below grants access for 500 seconds to a file called index.html in a bucket named s3redirect:

```shell
./signedurl.py -b s3redirect -o index.html -t 500
```

which would return an answer like the one below:

https://s3redirect.s3.amazonaws.com/index.html?AWSAccessKeyId=AKIAVFTCNZXUBOD4ZTX6&Signature=gptFG4W2hCoRCWmd%2FloDtvqUonc%3D&Expires=1640724520

You can of course also do this using the CLI directly, with the following commands:

```shell
aws s3 presign s3://sqcows-bucket/<file_to_share>
aws s3 presign s3://sqcows-bucket/<file_to_share> --expires-in 300  # 5 minutes
```
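Because a presigned URL stops working once it expires, it can be handy to check a link before passing it on. The sketch below reads the expiry from the `Expires` query parameter, as seen in the example URL above. It is a minimal illustration under that assumption: URLs signed with Signature Version 4 carry `X-Amz-Date` and `X-Amz-Expires` instead and are not handled here. The helper names, the bucket name and the signature value in the sample URL are made up.

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlparse


def expiry_of(presigned_url):
    """Return the expiry time (UTC) from the URL's `Expires` query parameter."""
    query = parse_qs(urlparse(presigned_url).query)
    if "Expires" not in query:
        raise ValueError("no Expires parameter in URL")
    return datetime.fromtimestamp(int(query["Expires"][0]), tz=timezone.utc)


def is_expired(presigned_url, now=None):
    """True if the URL's expiry time has already passed."""
    now = now or datetime.now(timezone.utc)
    return now >= expiry_of(presigned_url)


# Hypothetical URL using the same Expires value as the example above.
url = "https://example-bucket.s3.amazonaws.com/index.html?Signature=abc&Expires=1640724520"
print(expiry_of(url).isoformat())
print(is_expired(url))
```

The `now` argument exists only so the check can be tested against a fixed point in time.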

Technical considerations

Whilst this is an easy way to share files with people who normally have no access, it could become a cumbersome process. It would make more sense to review the users’ access rights and grant them proper access to the files they need. This would also prevent the signed URL being used by others.

Business considerations

The signed URL can be used by anyone who receives the link. This opens up substantial risks for a business if a signed URL is used for PII data and that URL is then leaked or erroneously emailed to the wrong recipient.

5 - MFA Delete

Prevent accidental deletion of objects

OpEx

Sec

Rel

Perf

Cost

Sus

It is possible to prevent accidental deletion of objects in S3. However, at the time of writing this doesn’t work through the mfa_delete argument in the Terraform provider, so we are going to make the call directly to the API.

Once enabled, users are required to enter an MFA code when they try to permanently delete an object version. This can provide extra time to think before doing something that can break things.

```shell
aws --profile <my_profile> s3api put-bucket-versioning --bucket <bucket-name> --versioning-configuration 'MFADelete=Enabled,Status=Enabled' --mfa 'arn:aws:iam::<account-id>:mfa/root-account-mfa-device <mfacode>'
```
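The same call can be made from Python. The sketch below wraps boto3's `put_bucket_versioning`, passing the MFA device serial and the current code as a single space-separated string, just as the CLI command does. The helper name `enable_mfa_delete` is an assumption, and the client is passed in so the function can be tried against a stub; in practice it needs a client using the bucket owner's root credentials.

```python
def enable_mfa_delete(s3_client, bucket_name, mfa_serial, mfa_code):
    """Enable versioning with MFA Delete on a bucket."""
    s3_client.put_bucket_versioning(
        Bucket=bucket_name,
        # Device serial (ARN) and the current code, separated by a space.
        MFA=f"{mfa_serial} {mfa_code}",
        VersioningConfiguration={"MFADelete": "Enabled", "Status": "Enabled"},
    )
```

A call would look like `enable_mfa_delete(boto3.client("s3"), "<bucket-name>", "arn:aws:iam::<account-id>:mfa/root-account-mfa-device", "<mfacode>")`, with the placeholders filled in as for the CLI command.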